Implementation of the See Far solution

The smart glasses consist of the following three modules:

• the Personalized Visual Assistant,

• the Personalized visual recommendation service, and

• the Embedded Navigation System.

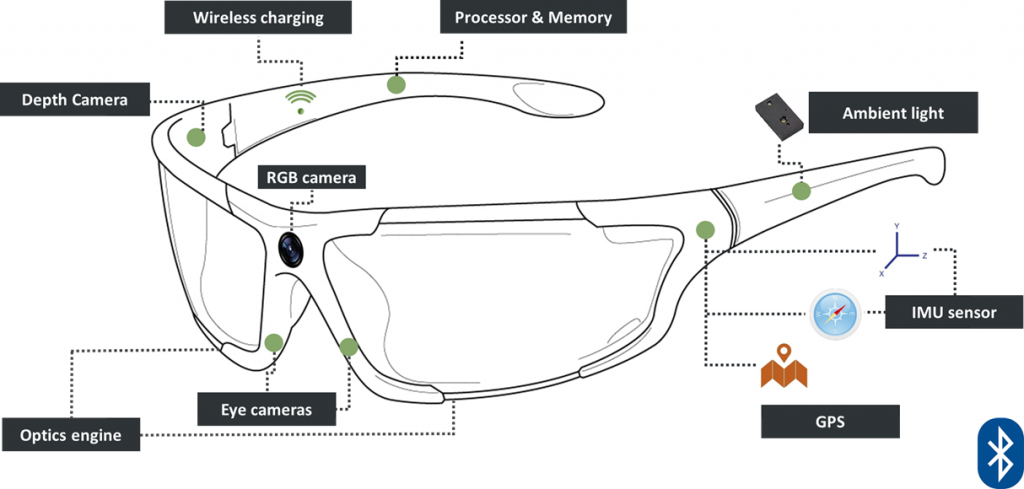

The Personalized Visual Assistant will take as input the condition of the eye, the focal point of the eye, and the environmental conditions, and will adapt the Display Lenses (see-through display) so that older adults have an optimal view of the objects and the space around them. For example, the Personalized Visual Assistant can act as an electronic magnifier for people who need one, or simply as a light-sensitivity adapter. Its functionality is based on two components: (i) the Intelligent component, which analyzes the information received from the sensors integrated in the smart glasses and from the See Far mobile application (i.e. the evolution of the vision deficiency) and decides which vision adjustment mechanism will be activated; and (ii) the Augmented reality component, which hosts all the augmented reality mechanisms developed to address vision deficiencies (contrast enhancement, brightness enhancement, color filtering, outline filtering, focus adjustment, etc.) and to provide the visual recommendations and navigation guidelines.
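
The decision step of the Intelligent component can be pictured as a mapping from eye condition, focal point, and environment to a set of AR mechanisms. The Python sketch below is a minimal rule-based illustration only: the `EyeCondition` and `Environment` types, the mechanism names, and every threshold are hypothetical assumptions, not part of See Far, and a real system might learn this mapping instead of hard-coding it.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class EyeCondition:
    visual_acuity: float         # hypothetical decimal acuity, 1.0 = normal
    light_sensitive: bool        # hypothetical flag, e.g. reported photophobia
    contrast_sensitivity: float  # hypothetical scale, 0.0 (none) .. 1.0 (normal)

@dataclass
class Environment:
    ambient_lux: float           # ambient illuminance from a light sensor

def select_mechanisms(eye: EyeCondition, focal_distance_m: float,
                      env: Environment) -> list[str]:
    """Rule-based stand-in for the Intelligent component.

    Returns the AR mechanisms to activate; all thresholds are illustrative.
    """
    mechanisms = []
    if eye.visual_acuity < 0.5:
        mechanisms.append("magnification")             # electronic magnifier
    if eye.light_sensitive and env.ambient_lux > 10_000:
        mechanisms.append("light_sensitivity_filter")  # bright daylight
    if env.ambient_lux < 50:
        mechanisms.append("brightness_enhancement")    # dim room
    if eye.contrast_sensitivity < 0.6:
        mechanisms.append("contrast_enhancement")
    if focal_distance_m < 0.5:
        mechanisms.append("focus_adjustment")          # near-vision task
    return mechanisms
```

For example, a light-sensitive user with low acuity reading outdoors would get magnification, light filtering, contrast enhancement, and focus adjustment, while a user with normal vision in an office would get no adjustment at all.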

The Personalized visual recommendation service will base its functionality on combining the information provided by the Visual impairments detection and monitoring service (i.e. the predicted risk of developing diabetes, cardiovascular, or other diseases) with the information received from the smart glasses sensors, which reflects the user's behavior. Combining this information will create the user's personalized profile (Personalized profile component) and will determine which augmented reality mechanism is activated to provide the user with the appropriate recommendations, suggestions, and motivation (Augmented reality component).
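
The flow from risk predictions and behavior to a profile and then to recommendations can be sketched as follows. This is a hypothetical illustration: the profile fields (`daily_steps`, `screen_hours`), the 0.5 risk threshold, and the message texts are assumptions chosen for the example, not the service's actual design.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class PersonalizedProfile:
    disease_risks: dict[str, float]  # predicted risks from the monitoring service, 0..1
    daily_steps: int                 # hypothetical behavioural signal from glasses sensors
    screen_hours: float              # hypothetical behavioural signal from glasses sensors

def build_profile(risk_predictions: dict[str, float],
                  behaviour: dict) -> PersonalizedProfile:
    """Personalized profile component: merge risk predictions with behaviour."""
    return PersonalizedProfile(
        disease_risks=dict(risk_predictions),
        daily_steps=int(behaviour.get("daily_steps", 0)),
        screen_hours=float(behaviour.get("screen_hours", 0.0)),
    )

def recommend(profile: PersonalizedProfile,
              risk_threshold: float = 0.5) -> list[str]:
    """Pick messages for the AR component to display (illustrative rules)."""
    high_risk = sorted(d for d, r in profile.disease_risks.items()
                       if r >= risk_threshold)
    messages = [f"Consider a check-up: elevated {d} risk" for d in high_risk]
    if high_risk and profile.daily_steps < 5000:
        messages.append("Motivation: a short walk today")
    if profile.screen_hours > 6:
        messages.append("Suggestion: take a screen break")
    return messages
```

Keeping profile construction separate from recommendation makes it possible to update the profile whenever new sensor data or a new risk prediction arrives, and to re-run the recommendation rules only when needed.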

The Augmented reality component receives input from the sensors integrated in the See Far smart glasses in order to interpret what the user sees (Scene interpretation), create the augmented reality scene (AR scene generation), and combine the real and augmented scenes (Combination of the two scenes), thus creating the augmented environment presented to the user.
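
The three stages can be sketched as a small pipeline. This assumes a video see-through setup where the camera frame is available as grayscale pixels in [0, 1] (on an optical see-through display the combination happens optically instead). Scene interpretation here is a placeholder that simply flags dark, poorly visible pixels, and the combination step uses standard per-pixel alpha blending; all function names are hypothetical.

```python
from __future__ import annotations

Frame = list[list[float]]  # grayscale image, intensities in [0, 1]

def interpret_scene(frame: Frame, dark_threshold: float = 0.3) -> list[tuple[int, int]]:
    """Scene interpretation placeholder: flag poorly visible (dark) pixels."""
    return [(r, c) for r, row in enumerate(frame)
            for c, v in enumerate(row) if v < dark_threshold]

def generate_ar_scene(shape: tuple[int, int], points: list[tuple[int, int]],
                      highlight: float = 1.0, opacity: float = 0.5) -> tuple[Frame, Frame]:
    """AR scene generation: an overlay image plus a per-pixel alpha mask."""
    rows, cols = shape
    overlay = [[0.0] * cols for _ in range(rows)]
    alpha = [[0.0] * cols for _ in range(rows)]
    for r, c in points:
        overlay[r][c] = highlight
        alpha[r][c] = opacity
    return overlay, alpha

def combine_scenes(frame: Frame, overlay: Frame, alpha: Frame) -> Frame:
    """Combination of the two scenes: out = a * overlay + (1 - a) * frame."""
    return [[a * o + (1.0 - a) * f for f, o, a in zip(f_row, o_row, a_row)]
            for f_row, o_row, a_row in zip(frame, overlay, alpha)]

def augment(frame: Frame) -> Frame:
    points = interpret_scene(frame)
    overlay, alpha = generate_ar_scene((len(frame), len(frame[0])), points)
    return combine_scenes(frame, overlay, alpha)
```

Pixels where the alpha mask is 0 pass through unchanged, so only the regions selected by scene interpretation are altered.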

The Embedded Navigation System will provide users with all the information they need to anticipate and overcome the restrictions and barriers that prevent them from making full and independent use of the workplace environment.
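
One way to picture "overcoming barriers" is route planning on a floor map where barriers are blocked cells. The sketch below assumes a hypothetical grid map of the workplace (`#` marks a barrier) and uses breadth-first search, which returns a route with the fewest steps on an unweighted grid; the actual navigation approach of See Far is not specified here.

```python
from __future__ import annotations
from collections import deque

def find_route(grid: list[str], start: tuple[int, int],
               goal: tuple[int, int]) -> list[tuple[int, int]] | None:
    """Breadth-first search on a 4-connected grid; '#' cells are barriers.

    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    prev = {start: None}          # visited cells -> predecessor on the route
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:   # walk predecessors back to start
                path.append(cell)
                cell = prev[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and (nr, nc) not in prev):
                prev[(nr, nc)] = cell
                queue.append((nr, nc))
    return None
```

Usage: `find_route(["...", ".#.", "..."], (0, 0), (2, 2))` returns a five-cell route around the central barrier, which the glasses could then present as step-by-step guidance.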

The Display Lenses are the means of conveying all the necessary visual information to the users, adapting to the conditions of the workplace environment and to the user's needs. To accomplish this, a set of sensors will be integrated in the smart glasses to provide the necessary information to the main components of the smart glasses.
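
Because several components consume the same sensor streams, a simple publish/subscribe hub is one plausible way to route readings. The sketch below is an assumption for illustration: the `SensorHub` class and the sensor names (e.g. `"ambient_light"`) are hypothetical, not a documented See Far interface.

```python
from __future__ import annotations
from collections import defaultdict
from typing import Any, Callable

class SensorHub:
    """Routes each sensor reading to every component subscribed to that sensor."""

    def __init__(self) -> None:
        self._subscribers: defaultdict[str, list[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, sensor: str, handler: Callable[[Any], None]) -> None:
        """Register a component callback for one sensor stream."""
        self._subscribers[sensor].append(handler)

    def publish(self, sensor: str, reading: Any) -> int:
        """Deliver a reading; returns how many components received it."""
        handlers = self._subscribers.get(sensor, [])  # .get avoids creating empty entries
        for handler in handlers:
            handler(reading)
        return len(handlers)
```

For instance, both the Personalized Visual Assistant and the Personalized visual recommendation service could subscribe to `"ambient_light"`, so a single reading reaches both without either component polling the sensor directly.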

The See Far mobile application will host the Impairments detection and monitoring service, which monitors the evolution of central vision and predicts diseases. It will analyze retinal images (Image Analysis Component) and detect the type and stage of vision impairment (Decision Support Component). The retinal images will be recorded by an ophthalmic camera that can easily be attached to a smartphone. More specifically, color digital images and videos of the retina can be obtained, covering the posterior pole (including the macula and optic disc) and the peripheral retina. Detecting changes between the outputs of the Decision Support Component at specific time intervals will make it possible to monitor the progress of the disease(s).
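
Monitoring progress by comparing Decision Support outputs over time can be sketched as follows. The `Assessment` record and the integer `stage` scale are hypothetical assumptions (real grading scales differ by condition); the function sorts assessments by date and reports each change in stage for the same impairment.

```python
from __future__ import annotations
from dataclasses import dataclass
from datetime import date

@dataclass
class Assessment:
    taken_on: date
    impairment: str  # type detected by the Decision Support Component
    stage: int       # hypothetical ordinal stage; higher = more advanced

def detect_progression(history: list[Assessment]) -> list[tuple[str, date, date, str]]:
    """Compare consecutive assessments of each impairment and report stage changes."""
    last: dict[str, Assessment] = {}  # most recent assessment per impairment
    changes = []
    for a in sorted(history, key=lambda x: x.taken_on):
        prev = last.get(a.impairment)
        if prev is not None and a.stage != prev.stage:
            trend = "progressed" if a.stage > prev.stage else "improved"
            changes.append((a.impairment, prev.taken_on, a.taken_on, trend))
        last[a.impairment] = a
    return changes
```

Sorting by date first means assessments can be stored in any order; an empty result indicates that no stage change was detected over the recorded intervals.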
